Basics of Docker

Docker was first released in March 2013. It was developed by Solomon Hykes and Sebastien Pahl.

Docker is a set of platform-as-a-service (PaaS) products that use O.S.-level virtualization, whereas VMware uses hardware-level virtualization.

Docker is an open-source, centralised platform designed to create, deploy and run applications.

Docker uses containers on the host O.S. to run applications. It allows applications to use the same Linux kernel as the host system, rather than creating a whole virtual O.S.

We can install Docker on any O.S., but Docker Engine runs natively on Linux distributions.

Docker is written in the Go language.

Docker is a tool that performs O.S.-level virtualization, also known as containerization.

Before Docker, many users faced the problem that a particular piece of code ran on the developer's system but not on the user's system.

Advantages of Docker

No pre-allocation of RAM

Continuous integration efficiency: Docker enables you to build a container image and use that same image across every step of the deployment process.

Lower cost

Lightweight

It can run on physical hardware, virtual hardware or in the cloud.

You can reuse the image.

It takes very little time to create a container.

Disadvantages of Docker

Docker is not a good solution for applications that require a rich GUI.

Managing a large number of containers is difficult.

Docker does not provide cross-platform compatibility: if an application is designed to run in a Docker container on Windows, it can't run on Linux, or vice versa.

Docker is suitable when the development O.S. and the testing O.S. are the same; if the O.S. is different, we should use a virtual machine.

There is no built-in solution for data recovery and backup.

Note: When an image is running, we call it a container; when a container is in a shippable, non-runnable state, we call it an image.

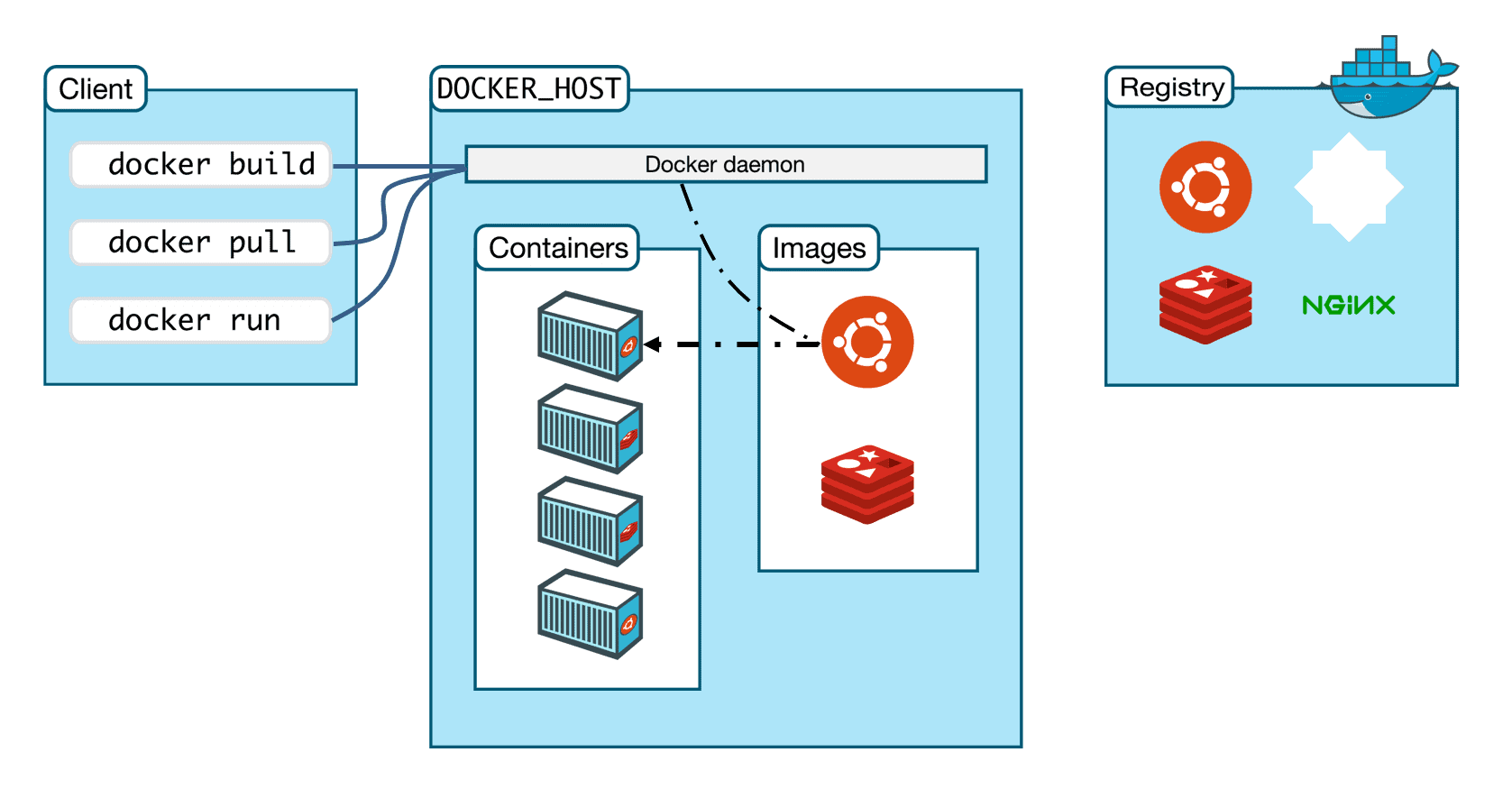

Architecture of Docker

Components of Docker

Docker Daemon

Docker daemon runs on the host O.S.

It is responsible for running containers and managing Docker services.

Docker daemon can communicate with other daemons.

Docker Client

Docker users can interact with the Docker daemon through a client.

The Docker client uses commands and a REST API to communicate with the Docker daemon.

When a user runs any command in the Docker client terminal, the client sends that command to the Docker daemon.

A Docker client can communicate with more than one daemon.

Docker Host

- The Docker host provides an environment to execute and run applications. It contains the Docker daemon, images, containers, networks and storage.

Docker Hub/Registry

A Docker registry stores and manages Docker images.

There are two types of registries in Docker:

Public registry: the public registry is also called Docker Hub.

Private registry: it is used to share images within an enterprise.

Docker Images

Docker images are read-only binary templates used to create Docker containers; in other words, an image is a single file with all the dependencies and configuration required to run a program.

Ways to create an image:

Take an image from Docker Hub.

Create an image from a Dockerfile.

Create an image from existing docker containers.

Docker Container

The container holds the entire package that is needed to run the application; in other words, the image is a template and the container is a copy of that template.

Containers provide O.S.-level virtualization when they run on the Docker engine.

Images become containers when they run on the Docker engine.

Docker installation and important commands

Create a machine on AWS using an AMI that has Docker installed, or install Docker yourself: yum install docker

To see all images present on your local machine: docker images

To find images on Docker Hub: docker search <image_name>

To download an image from Docker Hub to the local machine: docker pull <image_name>

To give a name to a container and run it: docker run -it --name <container_name> <image_name> /bin/bash [-it -> interactive mode with a terminal attached]

To check whether the Docker service is running: service docker status (for system-wide details: docker info)

To start the service: service docker start

To start/stop a container: docker start <container_name> or docker stop <container_name>

To list all containers, including stopped ones: docker ps -a

To go inside a running container: docker attach <container_name> (or open a new shell in it: docker exec -it <container_name> /bin/bash)

To see only running containers: docker ps

To stop a container: docker stop <container_name>

To delete a container: docker rm <container_name>

To exit from a Docker container: exit

To delete an image: docker image rm <image_name>
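The commands above are usually used together as one container lifecycle. The script below is a minimal sketch of that sequence, assuming the public ubuntu image and a hypothetical container name demo-box. It starts a detached container (docker run -d) instead of an interactive shell so it can run from a script, and it only calls Docker when docker info reports a reachable daemon.

```shell
#!/bin/sh
# Sketch of a typical container lifecycle using the commands listed above.
# "demo-box" is a hypothetical container name; "ubuntu" is the public Docker Hub image.
lifecycle_demo() {
  docker pull ubuntu                               # download the image
  docker images                                    # list local images
  docker run -d --name demo-box ubuntu sleep 300   # start a named container in the background
  docker ps                                        # running containers only
  docker exec demo-box ls /tmp                     # run a command inside the container
  docker stop demo-box                             # stop it
  docker ps -a                                     # stopped containers are still listed
  docker start demo-box                            # start it again
  docker stop demo-box
  docker rm demo-box                               # delete the container
}

# Run the demo only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  lifecycle_demo
fi
```

For the interactive shell used in the list, replace -d and sleep 300 with -it and /bin/bash.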

Docker image Creation

Docker image creation from an existing container

First, create a container from the base image: docker run -it --name <container_name> <image_name> /bin/bash

Go to the tmp directory: cd tmp/

Now create a file inside this tmp directory: touch myfile

To see the difference between the base image and the changes made on it (run this on the host, for example after exit): docker diff <container_name> [A -> added, C -> changed, D -> deleted]

Now create an image from this container: docker commit <container_name> <new_image_name>

To see the images: docker images

Now create a container from the new image: docker run -it --name <new_container_name> <new_image_name> /bin/bash, then run ls, cd tmp/ and ls again; the output shows myfile (you get all your files back).
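The same commit workflow can be scripted without an interactive shell. This is a minimal sketch, assuming the ubuntu image and the hypothetical names base-box, restored-box and my-updated-image; each container runs a single command instead of /bin/bash, and the Docker steps run only when a daemon is reachable.

```shell
#!/bin/sh
# Sketch: build an image from a modified container, without an interactive shell.
# "base-box", "restored-box" and "my-updated-image" are hypothetical names.
commit_demo() {
  # 1. Create a container from the base image; it adds /tmp/myfile and exits.
  docker run --name base-box ubuntu touch /tmp/myfile
  # 2. Show what changed compared with the image (A = added, C = changed, D = deleted).
  docker diff base-box
  # 3. Save the container's filesystem as a new image.
  docker commit base-box my-updated-image
  docker images my-updated-image
  # 4. A new container from the new image already contains the file.
  docker run --rm --name restored-box my-updated-image ls /tmp
  docker rm base-box
}

if docker info >/dev/null 2>&1; then
  commit_demo
fi
```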

Docker image creation by creating Dockerfile

Dockerfile

A Dockerfile is a text file that contains a set of instructions.

It automates Docker image creation.

FROM

- Sets the base image. This instruction must come first in the Dockerfile (only ARG may appear before it).

RUN

- Executes commands at build time; each RUN creates a new layer in the image.

MAINTAINER

- Author/owner of the image (deprecated; a LABEL such as maintainer="..." is now preferred).

COPY

- Copies files from the local system (the build context on the Docker VM); we need to provide a source and a destination. (It can't download files from the Internet or any remote repository.)

ADD

- Similar to COPY, but it can also download files from a URL, and it automatically extracts local tar archives into the image.

EXPOSE

- Declares the port the container listens on (it does not publish the port to the host by itself).

WORKDIR

- To set a working directory for a container.

CMD

- Sets the default command that runs when a container is started (unlike RUN, which runs while the image is built).

ENTRYPOINT

- Similar to CMD, but has higher priority: the ENTRYPOINT command is always executed first, and CMD (if present) only supplies its default arguments, which docker run arguments can override.

ENV

- Sets environment variables, available both during the build and in the running container.

ARG

- Defines the name of a build-time parameter and its default value (overridable with docker build --build-arg). The difference between ENV and ARG is that a value set with ARG exists only during the build; you can't access it later when you run the Docker container.
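To see how the instructions above fit together, here is a sketch that writes a small Dockerfile into a temporary directory. The image name instr-demo, the script app.sh, the ARG APP_VERSION, the ENV APP_HOME and the maintainer label are all hypothetical; LABEL is used in place of the deprecated MAINTAINER. The build and run steps execute only if a Docker daemon is reachable.

```shell
#!/bin/sh
# Sketch: a Dockerfile that uses most of the instructions listed above.
# instr-demo, app.sh, APP_VERSION and APP_HOME are hypothetical names.
BUILD_DIR=$(mktemp -d)

# A tiny script that the Dockerfile will COPY into the image.
cat > "$BUILD_DIR/app.sh" <<'EOF'
#!/bin/sh
echo "greeting: $* (APP_HOME=$APP_HOME)"
EOF

cat > "$BUILD_DIR/Dockerfile" <<'EOF'
FROM ubuntu
# LABEL replaces the deprecated MAINTAINER instruction.
LABEL maintainer="you@example.com"
# ARG exists only at build time; override it with --build-arg APP_VERSION=2.0
ARG APP_VERSION=1.0
# ENV is visible at build time and inside the running container.
ENV APP_HOME=/opt/app
WORKDIR /opt/app
COPY app.sh .
RUN chmod +x app.sh && echo "$APP_VERSION" > version.txt
EXPOSE 8080
# ENTRYPOINT always runs; CMD only supplies default arguments.
ENTRYPOINT ["/opt/app/app.sh"]
CMD ["hello"]
EOF

if docker info >/dev/null 2>&1; then
  docker build -t instr-demo "$BUILD_DIR"
  docker run --rm instr-demo         # app.sh runs with the default CMD argument "hello"
  docker run --rm instr-demo world   # "world" replaces CMD; ENTRYPOINT stays
fi
```

The second docker run shows the CMD/ENTRYPOINT split: run-time arguments replace CMD, while ENTRYPOINT keeps running.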

Creation of Dockerfile

Create a file named Dockerfile: vi Dockerfile

Add instructions to the Dockerfile, one per line:

FROM ubuntu

RUN echo "Creating our image" > /tmp/testfile

Build the Dockerfile to create an image (the trailing dot is the build context, i.e. the current directory): docker build -t <image_name> .

Run image to create the container: docker run -it --name <container_name> <image_name> /bin/bash
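The steps above can also be run without an editor. This sketch, assuming the hypothetical names my-first-image and my-first-box, writes the same two-line Dockerfile into a temporary directory with a heredoc; the build and run happen only when a Docker daemon is reachable.

```shell
#!/bin/sh
# Sketch: create, build and run the two-line Dockerfile above.
# "my-first-image" and "my-first-box" are hypothetical names.
BUILD_DIR=$(mktemp -d)

# Write the Dockerfile, one instruction per line.
cat > "$BUILD_DIR/Dockerfile" <<'EOF'
FROM ubuntu
RUN echo "Creating our image" > /tmp/testfile
EOF

if docker info >/dev/null 2>&1; then
  # The last argument is the build context (the directory holding the Dockerfile).
  docker build -t my-first-image "$BUILD_DIR"
  # Print the file that RUN created at build time.
  docker run --rm --name my-first-box my-first-image cat /tmp/testfile
fi
```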

Docker Volume

A volume is simply a directory inside our container.

First, we declare this directory as a volume; then we can share it.

Even if we stop the container, we can still access the volume.

A volume is created in one container.

You can declare a directory as a volume only while creating the container.

You can't add a volume to an existing container.

You can share one volume across any number of containers.

The volume's data is not included when you create an updated image from the container (docker commit).

We can map volumes in two ways:

Container <--> Container

Host <--> Container

Benefits of Volume

Decouples the container from storage.

Shares a volume among different containers.

Attaches the volume to containers.

Deleting the container does not delete the volume.

Creating volume from Dockerfile

Create a Dockerfile and write:

FROM ubuntu

VOLUME ["/myvolume1"]

Then create an image from this Dockerfile.

docker build -t <image_name> .

Now create a container from this image and run it:

docker run -it --name <container_name> <image_name> /bin/bash

Now run ls; you can see myvolume1.

Now share the volume with another container [container <--> container]:

docker run -it --name <new_container_name> --privileged=true --volumes-from <container_name> <os_image_name> /bin/bash

After creating the second container, myvolume1 is visible in it too; whatever you change in the volume from one container can be seen from the other.
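The Dockerfile-based walk-through above can also be run non-interactively. This sketch assumes hypothetical names (vol-image, vol-owner, vol-reader, from-owner.txt) and writes the Dockerfile into a temporary directory; the Docker steps run only when a daemon is reachable.

```shell
#!/bin/sh
# Sketch: declare a volume in a Dockerfile, then share it with --volumes-from.
# "vol-image", "vol-owner", "vol-reader" and from-owner.txt are hypothetical names.
BUILD_DIR=$(mktemp -d)

cat > "$BUILD_DIR/Dockerfile" <<'EOF'
FROM ubuntu
VOLUME ["/myvolume1"]
EOF

volume_dockerfile_demo() {
  docker build -t vol-image "$BUILD_DIR"
  # Container 1 writes a file into the declared volume, then exits.
  docker run --name vol-owner vol-image touch /myvolume1/from-owner.txt
  # Container 2 mounts the same volume and lists the file written by container 1.
  docker run --rm --name vol-reader --volumes-from vol-owner ubuntu ls /myvolume1
  # -v also removes the anonymous volume attached to vol-owner.
  docker rm -v vol-owner
}

if docker info >/dev/null 2>&1; then
  volume_dockerfile_demo
fi
```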

Create volume by using the command

docker run -it --name <container_name> -v /<volume_name> <os_name> /bin/bash

Then run ls and change into your volume directory.

Now create one more container and share the volume with it:

docker run -it --name <new_container_name> --privileged=true --volumes-from <container_name> <os_image_name> /bin/bash

Now you are inside the new container; run ls and you can see your volume.

Now create a file inside this volume and check from the other container; you can see that file.
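The -v variant works the same way, except that the volume is declared on the docker run command line. In this sketch /myvolume2, note.txt, vol-src and vol-copy are hypothetical names; docker inspect is used to show where Docker keeps the anonymous volume on the host.

```shell
#!/bin/sh
# Sketch: declare a volume with -v at run time and share it with --volumes-from.
# "/myvolume2", note.txt, "vol-src" and "vol-copy" are hypothetical names.
run_volume_demo() {
  # -v /myvolume2 (no host path) creates an anonymous volume mounted at /myvolume2.
  docker run --name vol-src -v /myvolume2 ubuntu sh -c 'echo shared > /myvolume2/note.txt'
  # The second container reuses every volume of vol-src and reads the file.
  docker run --rm --name vol-copy --volumes-from vol-src ubuntu cat /myvolume2/note.txt
  # Show the volume's mount details, including its location on the host.
  docker inspect --format '{{ json .Mounts }}' vol-src
  docker rm -v vol-src
}

if docker info >/dev/null 2>&1; then
  run_volume_demo
fi
```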

Volumes [Host <--> Container]

Verify the files in /home/ec2-user on the host.

Create a volume that maps the host directory to a directory in the container:

docker run -it --name <container_name> -v /home/ec2-user:/<volume_name> --privileged=true <os_name> /bin/bash

Check your volume: cd /<volume_name>, then run ls (you can see all the files of the host directory), create a file with touch <file_name>, and exit.

Now check /home/ec2-user on the EC2 host; you can see the new file.
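A host-to-container mapping can be tried without an EC2 machine. This sketch assumes a temporary directory in place of /home/ec2-user and a hypothetical container name bind-box; the container step runs only when a Docker daemon is reachable.

```shell
#!/bin/sh
# Sketch: map a host directory into a container (Host <--> Container).
# A temporary directory stands in for /home/ec2-user; "bind-box" is hypothetical.
HOST_DIR=$(mktemp -d)
echo "created on the host" > "$HOST_DIR/host-file.txt"

bind_mount_demo() {
  # -v <host_path>:<container_path> mounts the host directory inside the container.
  docker run --rm --name bind-box -v "$HOST_DIR":/hostdata ubuntu \
    sh -c 'ls /hostdata && touch /hostdata/from-container.txt'
  # The file created inside the container now exists on the host as well.
  ls "$HOST_DIR"
}

if docker info >/dev/null 2>&1; then
  bind_mount_demo
fi
```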

Some other commands

To see all created volumes: docker volume ls

To create a Docker volume (a named volume): docker volume create <volume_name>

To delete a volume: docker volume rm <volume_name>

To remove unused Docker volumes: docker volume prune (on Docker Engine 23.0 and later, add --all to include unused named volumes)

To get volume details: docker volume inspect <volume_name>

To get container details: docker container inspect <container_name>
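The volume commands above usually appear together in a named-volume lifecycle. This is a sketch with the hypothetical names app-data and writer; docker volume prune is deliberately left out so the demo does not delete unrelated volumes on your machine.

```shell
#!/bin/sh
# Sketch: lifecycle of a named volume. "app-data" and "writer" are hypothetical names.
named_volume_demo() {
  docker volume create app-data
  docker volume ls
  # -v <volume_name>:<container_path> mounts the named volume created above.
  docker run --name writer -v app-data:/data ubuntu sh -c 'date > /data/stamp.txt'
  docker volume inspect app-data                                 # includes the host Mountpoint
  docker container inspect --format '{{ json .Mounts }}' writer  # the container's mounts
  docker rm writer                                               # the named volume survives
  docker run --rm -v app-data:/data ubuntu cat /data/stamp.txt   # the data is still there
  docker volume rm app-data                                      # now delete it explicitly
}

if docker info >/dev/null 2>&1; then
  named_volume_demo
fi
```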

Some other important terms

The difference between docker attach and docker exec

docker exec creates a new process in the container's environment, while docker attach just connects the standard input, output and error of the container's main process to the corresponding streams of the current terminal.

docker exec is meant for running new things in an already started container, be it a shell or some other process.
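The exec/attach difference is easiest to see with a container whose main process keeps printing. This sketch assumes a hypothetical container name counter-box and uses the coreutils timeout command to detach from docker attach after a few seconds; it runs only when a Docker daemon is reachable.

```shell
#!/bin/sh
# Sketch: docker exec vs docker attach. "counter-box" is a hypothetical container name.
exec_vs_attach_demo() {
  # The main process (PID 1) prints a numbered line every second.
  docker run -d --name counter-box ubuntu \
    sh -c 'i=0; while true; do i=$((i+1)); echo "tick $i"; sleep 1; done'

  # exec starts a NEW process next to PID 1; $$ is that new shell's PID (not 1).
  docker exec counter-box sh -c 'echo "exec runs as PID $$"'

  # attach connects this terminal to PID 1's output (the "tick" lines).
  # --sig-proxy=false stops signals from being forwarded to PID 1;
  # timeout ends the attach after 5 seconds so the script can continue.
  timeout 5 docker attach --sig-proxy=false counter-box

  docker rm -f counter-box
}

if docker info >/dev/null 2>&1; then
  exec_vs_attach_demo
fi
```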

The difference between exposing and publishing a port in Docker

We have three options:

Specify neither EXPOSE nor -p

Specify only EXPOSE

Specify both EXPOSE and -p

Explanation

If we specify neither EXPOSE nor -p, the service in the container will only be accessible from inside the container itself.

If we EXPOSE a port, the service in the container is not accessible from outside Docker, but it is accessible from inside other Docker containers, so this is good for inter-container communication.

If we EXPOSE and publish (-p) a port, the service in the container is accessible from anywhere, even outside Docker.

Note: If we use -p but do not EXPOSE, Docker does an implicit expose. This is because if a port is open to the public, it is automatically also open to other Docker containers. (In current Docker versions, EXPOSE mainly documents the port and is used by docker run -P; containers on the same network can usually reach each other's listening ports even without it.)
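The cases can be compared with two containers from the same image. This sketch assumes the official nginx image (whose Dockerfile EXPOSEs port 80), a free host port 8080, curl on the host, and the hypothetical container names web-private and web-public; it runs only when a Docker daemon is reachable.

```shell
#!/bin/sh
# Sketch: an exposed-only port vs a published port, using the public nginx image.
# "web-private", "web-public" and host port 8080 are hypothetical choices.
expose_publish_demo() {
  # nginx's image EXPOSEs 80, but nothing is published to the host here.
  docker run -d --name web-private nginx
  # -p <host_port>:<container_port> publishes container port 80 on host port 8080.
  docker run -d --name web-public -p 8080:80 nginx
  docker port web-private   # no output: port 80 is exposed, not published
  docker port web-public    # shows the 80/tcp -> host port 8080 mapping
  sleep 2                   # give nginx a moment to start
  # Only the published container answers from the host.
  curl -s -o /dev/null -w '%{http_code}\n' http://localhost:8080
  docker rm -f web-private web-public
}

if docker info >/dev/null 2>&1; then
  expose_publish_demo
fi
```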